Dissecting Educational Disparities in 8th Grade Standardized Testing Amidst COVID-19

DATA 73000 Final Project

Nicole Baz, May 2024

The Research Question

How have 8th-grade standardized testing scores evolved across diverse student groups pre- and post-COVID-19, considering various intersectional identifiers, and what factors may explain any observed disparities?

The Audience

This project contributes to a better understanding of the COVID-19 pandemic's impact on educational outcomes and can help address disparities in standardized testing performance. These findings could inform decisions about resource allocation and policy interventions aimed at promoting equity in education. Key audiences include policymakers and educators, as well as the parents and students who are feeling the pandemic's impact on learning.

The Project:

The Data:

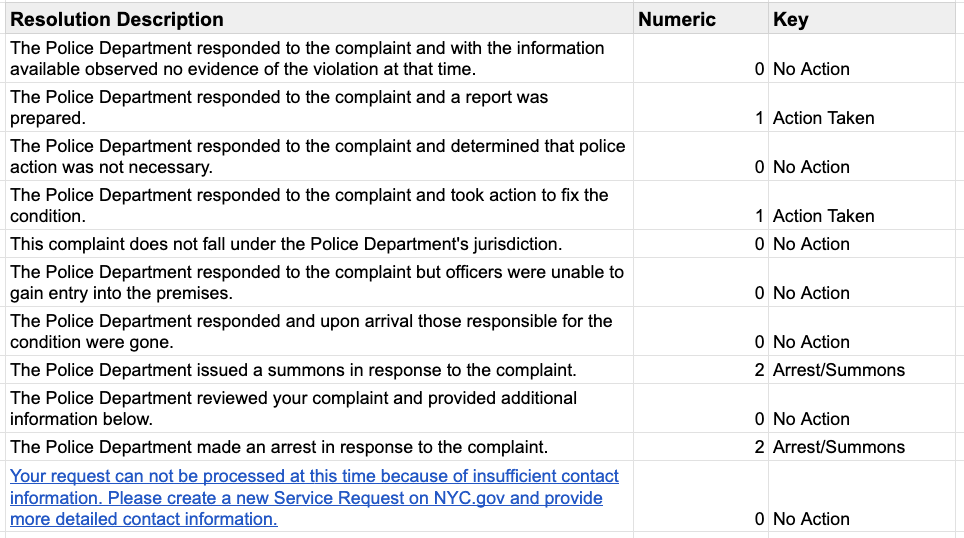

The dataset for this analysis was sourced from the Nation’s Report Card, specifically the National Assessment of Educational Progress (NAEP), which is administered by the National Center for Education Statistics (NCES) within the Institute of Education Sciences (IES). The dataset includes 8th-grade mathematics and reading scale scores for 2015, 2017, 2019 and 2022, disaggregated by the following demographic variables:

- State

- Race

- Gender

- Lunch program eligibility (proxy for family income)

- Disability status

- English language learner (ELL) status

The data were extracted in CSV format from the NAEP Data Explorer. Data cleaning involved standardizing variable names, handling missing data and aggregating scores at the state and national levels. Additionally, a “learning loss” metric was calculated for each demographic group and state by subtracting the average of the 2015, 2017 and 2019 scores from the 2022 score, to assess the pandemic's impact on testing outcomes.
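The cleaning and learning-loss steps above can be sketched in Python as follows. This is a minimal illustration, not the project's actual pipeline: it assumes a simplified CSV already flattened to the hypothetical columns `Jurisdiction`, `Group`, `Subject`, `Year` and `Average Scale Score` (real NAEP Data Explorer exports are laid out differently), assumes suppressed cells appear as non-numeric symbols such as `‡`, and averages whichever pre-COVID years are present, since the write-up doesn't say how gaps in the baseline were handled. The sample rows are made-up values for illustration only.

```python
import csv
import io
import re
from collections import defaultdict
from statistics import mean

PRE_COVID_YEARS = ("2015", "2017", "2019")
POST_COVID_YEAR = "2022"


def standardize_name(name):
    """Lower-case a header and collapse non-alphanumeric runs into underscores."""
    return re.sub(r"[^0-9a-z]+", "_", name.strip().lower()).strip("_")


def parse_score(raw):
    """Return the score as a float, or None for blank or placeholder cells (assumed, e.g. '‡')."""
    try:
        return float(raw)
    except ValueError:
        return None


def load_scores(csv_text):
    """Map (jurisdiction, group, subject) -> {year: score}, dropping missing scores."""
    scores = defaultdict(dict)
    for record in csv.DictReader(io.StringIO(csv_text)):
        row = {standardize_name(k): (v or "").strip() for k, v in record.items()}
        score = parse_score(row["average_scale_score"])
        if score is not None:
            key = (row["jurisdiction"], row["group"], row["subject"])
            scores[key][row["year"]] = score
    return scores


def learning_loss(by_year):
    """2022 score minus the mean of available pre-COVID scores; None if either side is missing."""
    baseline = [by_year[y] for y in PRE_COVID_YEARS if y in by_year]
    if not baseline or POST_COVID_YEAR not in by_year:
        return None
    return by_year[POST_COVID_YEAR] - mean(baseline)


# Hypothetical sample data, not real NAEP results.
SAMPLE_CSV = """Jurisdiction,Group,Subject,Year,Average Scale Score
State A,All students,Math,2015,280
State A,All students,Math,2017,282
State A,All students,Math,2019,284
State A,All students,Math,2022,274
State B,ELL,Math,2019,‡
State B,ELL,Math,2022,250
"""

scores = load_scores(SAMPLE_CSV)
for key, by_year in scores.items():
    print(key, learning_loss(by_year))
```

With this sample, State A's learning loss comes out to -8.0 points (274 minus the pre-COVID average of 282), while State B's ELL group returns None because its only pre-COVID cell is suppressed and never enters the baseline.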

The Visualizations:

This extensive visual report includes the following visualizations:

- An interactive map that shows average student scale scores by state from 2015 to 2022.

- A bar chart that shows state-level scores compared to the national average across both math and reading and all included test years.

- Line charts that show score trends over time, with the option to compare a state’s overall trend to trends by demographic group.

- Two bubble charts, one for reading and one for math, that display all “learning loss” data at once, making it easy to see which subgroups were most affected.

The visualizations can be found here or embedded below:

Design Rationale

My designs aimed to build a clear narrative by starting with the “big picture” and then drilling down into jurisdictions, demographic groups and deviations from typical (pre-pandemic) performance.

COVER PAGE:

As this dataset is quite specific, I felt it best to begin with some context. The cover page explains the rationale behind the project to prepare viewers for the dashboards that follow.

MAP:

Because these scores come from “The Nation’s Report Card,” which reports results on its own scale, I included information about that scale on the dashboard to make clear what was being shown.

A map seemed like an intuitive starting place. Each state's fill is determined by the average scale score of all tested students in that state. The goal was to show where scores were highest and lowest from 2015 to 2022; the map also revealed the major drop-off in scores in 2022, which primes viewers for the visualizations that follow.

Please note: I excluded Puerto Rico from the map because its scores were an outlier that stretched the color scale and distorted the choropleth shading for every other jurisdiction.
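To show why a single outlier can wash out a choropleth, here is a minimal sketch assuming a linear min-max color scale (the dashboard's actual color settings aren't described here). The jurisdiction names and scores are hypothetical.

```python
def color_positions(scores, exclude=()):
    """Map each jurisdiction to a 0-1 position on a linear min-max color scale."""
    kept = {name: s for name, s in scores.items() if name not in exclude}
    lo, hi = min(kept.values()), max(kept.values())
    span = (hi - lo) or 1.0  # avoid dividing by zero when all scores are equal
    return {name: (s - lo) / span for name, s in kept.items()}


# Hypothetical average scale scores, not real NAEP results.
SCORES = {"State A": 280.0, "State B": 270.0, "State C": 260.0, "Outlier": 220.0}

with_outlier = color_positions(SCORES)
without_outlier = color_positions(SCORES, exclude={"Outlier"})
print(with_outlier)
print(without_outlier)
```

With the outlier included, States A through C are squeezed into the top third of the scale (positions of roughly 0.67 to 1.0); excluding it spreads them across the full 0 to 1 range, so their differences become visible in the shading.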

BAR CHART:

Next, I wanted to present the scores in ranked order so it was easy to see which states were highest and lowest in any given year and subject. I colored the bars by quartile (bands of 25 percentiles) and added an “average” reference line showing how far above or below average each state was. This line represents the national average scale score for all students tested.
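The quartile coloring and the reference-line comparison can be sketched as below. This assumes that “percentile bands of 25%” means quartiles of the state scores for a given year and subject, computed here with Python's `statistics.quantiles` (the dashboard tool may compute percentiles slightly differently); the state labels, scores and national average are hypothetical.

```python
from bisect import bisect_right
from statistics import quantiles


def quartile_bands(scores):
    """Assign each state to band 1 (lowest 25%) through 4 (highest 25%)."""
    cuts = quantiles(scores.values(), n=4)  # three cut points between the four bands
    return {state: bisect_right(cuts, s) + 1 for state, s in scores.items()}


def gap_from_average(scores, national_average):
    """Signed distance of each state's score from the national average reference line."""
    return {state: s - national_average for state, s in scores.items()}


# Hypothetical scores for eight states in one year and subject.
SCORES = {f"S{i}": 258.0 + 2 * i for i in range(1, 9)}  # 260.0 ... 274.0
NATIONAL_AVERAGE = 266.0  # hypothetical

bands = quartile_bands(SCORES)
gaps = gap_from_average(SCORES, NATIONAL_AVERAGE)
for state in sorted(SCORES, key=SCORES.get, reverse=True):
    print(state, SCORES[state], "band", bands[state], "gap", gaps[state])
```

Using `bisect_right` places a score that lands exactly on a cut point in the higher band; a different tie rule would shift only those boundary states.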

LINE GRAPHS:

The line graphs were designed to highlight trends, and they give viewers their first opportunity to explore differences between demographic groups. They still focus on average scale score, but viewing the data over time lets viewers use the trend lines to begin parsing the impact of pandemic learning conditions on performance.

These charts also highlight score and trend differences within groups, as well as missing data. Although NAEP randomly selects schools to participate in these assessments, a handful of demographic groups are unrepresented in the data.

BUBBLE CHARTS:

Finally, I wanted to give viewers the most detailed view possible: everything at once. To do this, I created a metric I called “learning loss,” which takes the average of the three pre-COVID test administrations (2015, 2017 and 2019) and subtracts it from the 2022 score for the same group or subgroup. A negative result means the group scored lower in 2022 than is “typical” for it; a positive result means it scored higher than its “typical” performance. These learning loss values were plotted on a bubble chart with a reference line at 0.

This allows viewers to see:

- The spread of scores across demographics (e.g., were all demographic groups in a state testing in about the same range, or was there a wide spread of scores?)

- The groups whose average scale scores in 2022 rose well above their pre-COVID average.

- The groups whose average scale scores in 2022 fell well below their pre-COVID average.

I built these charts for both reading and math. Because each chart contains a lot of data, I annotated the most extreme points to draw viewers' attention to them.
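The spread and extreme points that the bubble charts surface can be computed directly from the learning-loss values. This is a hypothetical sketch: it assumes learning loss has already been calculated per (state, group) pair, defines spread as the range (maximum minus minimum) within each state, and picks the `k` largest drops and gains as annotation candidates; the criteria actually used to choose the “most extreme” points aren't stated. All values are made up.

```python
from collections import defaultdict


def spread_by_state(losses):
    """Range (max - min) of learning loss across demographic groups in each state."""
    per_state = defaultdict(list)
    for (state, _group), value in losses.items():
        per_state[state].append(value)
    return {state: max(values) - min(values) for state, values in per_state.items()}


def extremes_to_annotate(losses, k=2):
    """Return the k largest drops (most negative) and k largest gains (most positive)."""
    drops = sorted((key for key, v in losses.items() if v < 0), key=losses.get)[:k]
    gains = sorted((key for key, v in losses.items() if v > 0), key=losses.get, reverse=True)[:k]
    return drops, gains


# Hypothetical learning-loss values keyed by (state, group), not real NAEP results.
LOSSES = {
    ("State A", "All students"): -8.0,
    ("State A", "ELL"): -12.0,
    ("State A", "Female"): -3.0,
    ("State B", "All students"): 0.5,
    ("State B", "Male"): 5.0,
}

print(spread_by_state(LOSSES))
drops, gains = extremes_to_annotate(LOSSES)
print("annotate drops:", drops)
print("annotate gains:", gains)
```

Splitting drops and gains by sign keeps the two annotation lists from overlapping; a group with a learning loss of exactly 0 lands on the reference line and is never selected.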

Next Steps

Moving forward, I would like to include more subjects and grades. This project focused on one grade level and two subjects, a limited scope given the breadth of data available from NAEP. Incorporating other national assessments to corroborate these findings would also be a meaningful step, and could help address the limited “post-COVID” testing data currently available through NAEP.

I also think it would be interesting to track a cohort over time. For example, in four years, how will this cohort of 8th graders perform on 12th-grade tests compared to previous cohorts? Are they still feeling the effects of pandemic learning, or have they regained momentum? While “learning loss” is a hot topic now, interest in it is already waning as we move on from the unprecedented challenges of COVID-19. However, I believe the generation of students who lost one to two years of in-school instruction will face new hurdles in the years ahead.

In closing, this project was a good first step in exploring these relationships, but it is far from comprehensive. Further research is needed to keep digging into the intersectional effects of the pandemic on learning, and policies focused on creating and sustaining an equitable learning environment for those most affected are essential.